
Transfer learning is an active area of machine learning research that lets you apply previously trained models to new problems. By taking the weights a network learned on one task and reusing them on another, you can speed up training and improve generalization in applications such as natural language processing and computer vision. This blog covers what transfer learning is, how it works in theory, where it is used in practice, and what advantages it offers. Understanding transfer learning in data mining begins with understanding data science; you can get an insight into the same through our Data Science training.

In transfer learning, a model that has already been trained on one problem is reused to address a different but related problem. Suppose, for example, you train a basic classifier to determine whether or not an image contains a backpack. You can then apply what the model has learned about recognizing objects to another category, such as sunglasses, rather than training a sunglasses classifier from scratch.

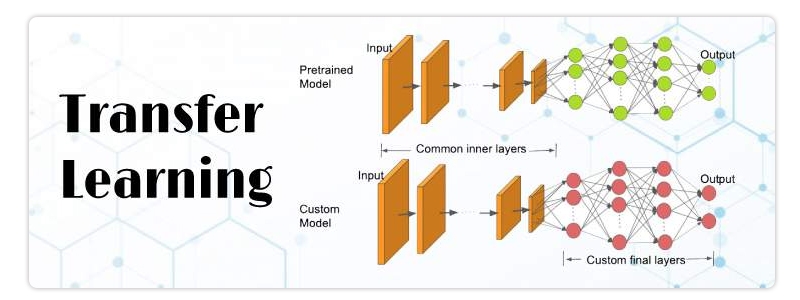

Transfer learning aims to use the knowledge acquired in one task to improve generalization in another. We take the weights a network has learned on one task, which we'll refer to as "task A," and apply them to another task, which we'll call "task B."

The fundamental idea is to take the knowledge a model has gained on a task with abundant labeled training data and apply it to a task with only a limited amount of data. Instead of starting from scratch, we begin with the patterns already learned on a related task, which accelerates learning. Transfer learning is most commonly used in computer vision and in natural language processing tasks such as sentiment analysis, because training models for these tasks from scratch requires a massive amount of computing power and data. Transfer learning is not, strictly speaking, a machine learning technique in its own right; it is better thought of as a "design methodology," in the same vein as active learning. Nor is it exclusive to machine learning as a field of study. Nevertheless, it has found broad use in combination with neural networks, which demand large amounts of data and computation.

1. Teaching a Model to Perform the Task

Suppose you need to solve task B but don't have enough data to train a deep neural network. One workaround is to find a related task A for which data is abundant. You train the deep neural network on task A, then use that model as the starting point for task B. Whether you reuse the whole model or only some of its layers depends on the problem. If both tasks take the same kind of input, you may be able to reuse the model as-is to make predictions on the new input. Alternatively, you can replace the task-specific and output layers and retrain the network.
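The idea of training on a data-rich task and reusing the result on a data-poor one can be sketched with a tiny two-layer network in NumPy. This is a minimal illustration, not a recipe: the toy data generator, the layer sizes, the learning rate, and every function name here are assumptions made up for the example. The network is first trained end to end on a "source" task with 2,000 labeled points; its hidden layer is then frozen and only a fresh output layer is trained on a related "target" task with 30 labeled points.

```python
import numpy as np

rng = np.random.default_rng(0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def log_loss(p, y):
    p = np.clip(p, 1e-9, 1 - 1e-9)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

def forward(X, W1, b1, w2, b2):
    H = np.tanh(X @ W1 + b1)            # shared hidden features
    return H, sigmoid(H @ w2 + b2)      # task-specific output

def train(X, y, W1, b1, w2, b2, steps=500, lr=0.1, freeze_hidden=False):
    """Gradient descent on log loss; optionally keep the hidden layer fixed."""
    W1, b1, w2 = W1.copy(), b1.copy(), w2.copy()
    for _ in range(steps):
        H, p = forward(X, W1, b1, w2, b2)
        err = (p - y) / len(y)          # gradient of the loss w.r.t. the logits
        grad_w2, grad_b2 = H.T @ err, err.sum()
        if not freeze_hidden:
            dH = np.outer(err, w2) * (1 - H ** 2)   # backprop through tanh
            W1 -= lr * (X.T @ dH)
            b1 -= lr * dH.sum(axis=0)
        w2 -= lr * grad_w2
        b2 -= lr * grad_b2
    return W1, b1, w2, b2

def make_task(n, true_w):
    """Hypothetical toy task: the label is the sign of a linear score."""
    X = rng.normal(size=(n, 4))
    return X, (X @ true_w > 0).astype(float)

# Source task: plenty of labels. Target task: related, but only a few labels.
X_src, y_src = make_task(2000, np.array([1.0, -1.0, 0.5, 0.0]))
X_tgt, y_tgt = make_task(30, np.array([1.0, -1.0, 0.0, 0.5]))

hidden = 8
W1_src, b1_src, _, _ = train(
    X_src, y_src,
    rng.normal(scale=0.5, size=(4, hidden)), np.zeros(hidden),
    rng.normal(scale=0.5, size=hidden), 0.0,
)

# Transfer: reuse the hidden layer, start a new output layer from zeros.
new_w2, new_b2 = np.zeros(hidden), 0.0
loss_before = log_loss(forward(X_tgt, W1_src, b1_src, new_w2, new_b2)[1], y_tgt)
W1_tgt, b1_tgt, w2_tgt, b2_tgt = train(
    X_tgt, y_tgt, W1_src, b1_src, new_w2, new_b2, freeze_hidden=True
)
loss_after = log_loss(forward(X_tgt, W1_tgt, b1_tgt, w2_tgt, b2_tgt)[1], y_tgt)
print("target loss before/after training the new head:", loss_before, loss_after)
```

With `freeze_hidden=True` only the output layer changes, so the hidden weights learned on the source task are carried over untouched. Passing `freeze_hidden=False` in the second call would instead retrain the whole network from the transferred starting point.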

2. Applying an Existing Pre-Trained Model

The second strategy is to use a model that has already been trained. Many such models are available, so it pays to do some research before choosing one. How many layers to reuse and how many to retrain depends on the problem. Keras, for example, provides a number of pre-trained models that can be used for transfer learning, prediction, feature extraction, and fine-tuning, along with short guides on how to use them. Many research institutions also share trained models with the public. This form of transfer learning is widely employed in the deep learning community.
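As a rough sketch of what this looks like with Keras, the function below loads a pre-trained ResNet50 without its original classification layers, freezes it, and adds a small new output layer. The function name, the 224x224 input size, and the pooling-plus-dense head are assumptions chosen for illustration, not the only reasonable setup.

```python
from tensorflow import keras
from tensorflow.keras import layers

def build_transfer_model(num_classes, weights="imagenet"):
    """Frozen pre-trained base plus a new trainable classification head."""
    # include_top=False drops the original ImageNet classifier layers.
    base = keras.applications.ResNet50(
        weights=weights, include_top=False, input_shape=(224, 224, 3)
    )
    base.trainable = False  # reuse the learned features as-is

    # Inputs are expected to be preprocessed the way the base model requires,
    # e.g. with keras.applications.resnet50.preprocess_input.
    inputs = keras.Input(shape=(224, 224, 3))
    x = base(inputs, training=False)        # keep batch-norm layers in inference mode
    x = layers.GlobalAveragePooling2D()(x)
    outputs = layers.Dense(num_classes, activation="softmax")(x)

    model = keras.Model(inputs, outputs)
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

Calling `build_transfer_model(num_classes=2)` downloads the ImageNet weights on first use; passing `weights=None` builds the same architecture with random weights, which is handy for checking the wiring offline but gives up the benefit of transfer learning. Only the final dense layer is trained when you call `model.fit` on your own data.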

3. Extracting Features

Another option is to use deep learning to discover the most relevant features, and so build a good representation of your problem. A model trained on such a learned representation can often perform significantly better than one trained on a hand-designed representation. Learned features also transfer readily. In computer vision, for example, neural networks typically detect edges in the earliest layers, shapes in the middle layers, and features specific to the task at hand in the later layers.

In transfer learning, the early and middle layers are reused, and only the later layers are retrained. This takes advantage of the labeled data from the task the model was originally trained on. In our example, a model trained to identify a backpack in an image is used to identify sunglasses. Since the model has already learned to recognize objects in its earlier layers, only the later layers need to be retrained to teach it what distinguishes sunglasses from other objects.

The purpose of transfer learning is to carry as much knowledge as possible from the task the model was originally trained on over to the new task. The form this knowledge takes depends on the problem and the available data. For example, it may be the way a model composes simple features into objects, which makes novel objects easier to recognize.

Transfer learning has emerged as a powerful tool in the field of machine learning, allowing models to learn from existing data and adapt it to new tasks. Effective transfer learning strategies can improve model accuracy, reduce training time, and save resources. One such strategy is fine-tuning, where pre-trained models are modified by retraining them on task-specific data. Research has shown that fine-tuning can significantly improve performance in various natural language processing (NLP) tasks, such as sentiment analysis and text classification. Another effective approach is feature extraction, where lower-level features learned by pre-trained models are used as inputs for new models trained on specific tasks. This method has been successful in image recognition applications like object detection and face recognition. Additionally, domain adaptation techniques can be used when dealing with different domains or datasets to make transfer learning more efficient. By leveraging these techniques and appropriate evaluation metrics for the target task, developers can effectively apply transfer learning to achieve better results while reducing costs and development time.

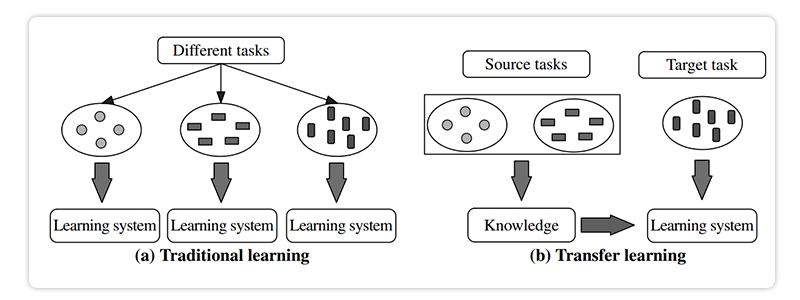

The purpose of transfer learning is to improve learning in a target task by using knowledge gained from one or more source tasks. Our example uses the classification of camera reviews as the source task and the classification of television reviews as the target task. Figure 1 shows the difference between conventional learning and transfer learning.

The standard approach builds a new classifier for each new classification task from that task's own class-labeled training and test data. Transfer learning algorithms instead construct a classifier for a new (target) task using knowledge from the source task, so the resulting classifier may need less training time and data. Conventional learning methods assume that the training and test data come from the same distribution and feature space, so if the distribution changes, the model must be rebuilt from scratch. With transfer learning, the training and test data may come from different distributions, involve different tasks, and even belong to different data domains. Humans benefit from transfer learning too, applying what they have learned from one activity to mastering another. Learning to play the recorder teaches us to read musical notation, which helps when learning the piano. Likewise, knowing Spanish can make learning Italian easier.

Transfer learning is useful in widespread applications where data becomes outdated or its distribution shifts. Here are two examples. The first is web-document classification, where we may have trained a classifier to label newsgroup posts according to predefined categories. Because online content changes constantly, the web data used to train the classifier can easily become outdated. The second is email spam filtering. Using a collection of users' emails, we might train a classifier to separate spam from non-spam messages. When new users join, the distribution of their emails may differ from that of the original group, so the trained model needs to be adapted. Let's dive deeper into transfer learning, its importance in data science, and the key takeaways. You can also check out the data science tutorial guide to review the basic concepts.

Transfer learning means reusing the weights a model has already learned for a particular architecture. It can be used in numerous scenarios:

Small Datasets

One common scenario where transfer learning is handy is dealing with small datasets. Training deep neural networks requires large amounts of data; however, collecting such data can be expensive or impractical. Pre-training a model on a larger dataset before fine-tuning it on your smaller dataset can help overcome this challenge. For instance, let's say you want to build an image classifier for detecting different types of flowers but only have 100 images per class. Instead of training your model from scratch on these limited samples (which may lead to overfitting), you could start with a pre-trained model like VGG16 trained on ImageNet (a large-scale image database). You would then fine-tune the last few layers for your flower classification task using your limited dataset.
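A hedged sketch of the flower scenario in Keras might look like the following. It keeps VGG16's earlier convolutional blocks frozen and leaves only the last block (`block5`) plus a new classification head trainable. The function name, the choice of `block5` as "the last few layers," the dropout rate, and the small learning rate are illustrative assumptions rather than tuned values.

```python
from tensorflow import keras
from tensorflow.keras import layers

def build_flower_classifier(num_classes, weights="imagenet"):
    """VGG16 base with only its last conv block and a new head left trainable."""
    base = keras.applications.VGG16(
        weights=weights, include_top=False, input_shape=(224, 224, 3)
    )
    # Freeze everything except the final convolutional block.
    for layer in base.layers:
        layer.trainable = layer.name.startswith("block5")

    inputs = keras.Input(shape=(224, 224, 3))
    x = base(inputs)
    x = layers.GlobalAveragePooling2D()(x)
    x = layers.Dropout(0.5)(x)              # some regularization for a small dataset
    outputs = layers.Dense(num_classes, activation="softmax")(x)

    model = keras.Model(inputs, outputs)
    # A low learning rate keeps fine-tuning from overwriting the pre-trained weights.
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=1e-5),
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

You would call something like `build_flower_classifier(num_classes=5)` and then `model.fit` on your labeled flower images. With so few images per class, it is common to train the new head first with the whole base frozen and only then unfreeze the last block, but that two-stage schedule is left out here for brevity.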

Similar Tasks

Another scenario where transfer learning works well is when similar tasks share common features or patterns. For example, if you have already trained a speech recognition system on English audio and now want to develop one for French audio, you could use the same architecture and the pre-trained weights of the English model as a starting point instead of starting from scratch. This works because the two languages share many phonemes, which are the basic units of sound.

Limited Computing Resources

Training deep neural networks can be computationally expensive, especially when dealing with large datasets or complex models. In such cases, transfer learning can reduce the training time and computational resources needed, compared with training your model from scratch.

For instance, let's say you want to build an object detection system using Faster R-CNN (a popular object detection algorithm) on limited hardware such as a laptop or Raspberry Pi. Training this model from scratch would require significant computing power and time; however, you could start from a Faster R-CNN model pre-trained on the COCO dataset (a large-scale object detection dataset). You would then fine-tune it for your specific task using transfer learning techniques.

Transfer learning is a powerful technique in machine learning that allows models trained on one task to be reused for another related task. This approach has gained significant popularity due to its ability to improve the performance of deep neural networks when data is limited.

One example of transfer learning can be seen in image classification tasks, where pre-trained models such as VGG16 and ResNet50 have been shown to achieve state-of-the-art results on new datasets with minimal training. For instance, researchers at Stanford University used transfer learning techniques to classify skin lesions with high accuracy using a pre-trained InceptionV3 model. The use of transfer learning has also extended beyond computer vision applications and into natural language processing (NLP) tasks such as sentiment analysis and text classification. Overall, the success of transfer learning highlights the importance of leveraging existing knowledge from previously learned tasks for improved performance on new ones.


Transfer learning is a powerful technique that enables us to build accurate machine learning models even when dealing with limited amounts of labeled data. It allows us to leverage knowledge learned from one task and apply it to another without starting from scratch. With the availability of pre-trained models and Python libraries like TensorFlow or Keras, transfer learning has become more accessible than ever before. By understanding the benefits and applications of transfer learning, data scientists can improve their model performance while saving time and resources. Join a self learning data science training course to understand transfer learning better.
