
Data cleaning is the procedure of removing erroneous information from a data set, table, or database. This includes fixing or removing any data that is inaccurate, irrelevant, or otherwise flawed.

Data cleansing is crucial to your analysis in data science. It is a key component of the data processing and preparation stages of the machine learning cycle. You can check out data science online certification courses to learn more about the data cleaning methods used on real-world datasets, as well as the other pre-processing stages and model-building phases of a data science lifecycle.

In Data Science or Data Mining, cleansing the data is an essential step. It's a crucial piece in the puzzle while making a model. Data cleaning is a necessary step that is often overlooked. In quality information management, data quality is the primary concern. Issues with data quality can arise in any kind of IT system. By cleansing the data, these issues may be addressed and resolved.

To "clean" data means to rectify or remove any errors, corruption, improper formatting, duplication, or incompleteness from a dataset. Results and methods are questionable, despite appearances of correctness, if the data used to generate them is flawed. There is a high risk of data duplication and mislabeling when merging data from several sources.

In most cases, cleaning data improves the overall quality of your information. Going through the records to fix mistakes and delete faulty entries can be tedious, but it must be done. Data mining, the process of extracting useful insights from large datasets, is itself a useful tool for cleaning data. Data quality mining, a relatively new approach, applies data mining techniques to find and repair faulty information in massive datasets. Because data mining automatically discovers previously unknown relationships within data, several of its methods can be applied to data cleaning. You can also learn about neural network guides and Python for data science if you are interested in further career prospects in data science.

The only way to arrive at a reliable conclusion is to first understand and then fix the quality of your data. Information has to be organized so that meaningful patterns can be uncovered. Data mining is exploratory in nature, and data cleansing is a prerequisite to obtaining useful business insights from data that was previously erroneous or incomplete.

Data cleaning in data mining is often a time-consuming procedure that requires IT personnel to review your data at the first stage. Without high-quality input data, however, your analysis may be imprecise or your results may be wrong.

To clean your data, you can follow these general procedures, although the specifics will vary depending on the kinds of data your organization holds.

Duplicate observations, and observations that add no value, should be removed from the dataset. Duplicates arise most often during data collection: combining datasets from several sources, scraping data, or receiving data from customers or other departments can all record the same observation twice. Deduplication is therefore one of the most important steps to consider. An irrelevant observation is one that does not belong in the context of the problem you are trying to solve.

If you want to analyze millennial consumers but your dataset also includes customers from earlier generations, you might want to remove those records. Doing so cuts analysis time, reduces distractions, and leaves a more manageable dataset.
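As a rough illustration of the two steps above, the sketch below removes exact duplicates and then filters out records that fall outside the target group. The record fields, the sample values, and the 1981 to 1996 birth-year range used for "millennial" are assumptions chosen for the example, not part of any particular dataset.

```python
# Hypothetical customer records; field names and values are illustrative only.
customers = [
    {"id": 1, "name": "Ana", "birth_year": 1990},
    {"id": 2, "name": "Ben", "birth_year": 1962},
    {"id": 1, "name": "Ana", "birth_year": 1990},  # exact duplicate of the first row
    {"id": 3, "name": "Cy", "birth_year": 1985},
]


def drop_duplicates(rows):
    """Keep the first occurrence of each identical record, preserving order."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(sorted(row.items()))  # hashable fingerprint of the whole row
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def keep_birth_years(rows, first_year, last_year):
    """Drop observations that are irrelevant to the question being asked."""
    return [r for r in rows if first_year <= r["birth_year"] <= last_year]


deduplicated = drop_duplicates(customers)
# Assumed cohort boundaries; adjust to whatever definition your analysis uses.
millennials = keep_birth_years(deduplicated, 1981, 1996)
print([r["id"] for r in millennials])  # [1, 3]
```

Only exact duplicates are caught here. Near-duplicates, such as the same customer with a differently spelled name, need a matching rule that suits your data.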

Structural errors are the unusual naming conventions, typos, or inconsistent capitalization that you notice when measuring or transferring data. These inconsistencies can lead to incorrectly categorized groups. For example, "N/A" and "Not Applicable" may both appear in a sheet, but they should be treated as the same category during analysis.
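One possible way to handle this kind of structural error is to map every label onto a single canonical spelling before counting categories. In the sketch below, the set of "not applicable" variants and the sample labels are assumptions made for illustration.

```python
from collections import Counter

# Assumed spellings that should all mean "not applicable".
NA_VARIANTS = {"n/a", "na", "not applicable"}


def normalize_label(value):
    """Trim and collapse whitespace, unify case, and merge N/A variants."""
    cleaned = " ".join(value.split()).lower()
    if cleaned in NA_VARIANTS:
        return "N/A"
    return cleaned.title()


raw_labels = ["N/A", "Not Applicable", " new york", "New York", "NEW YORK ", "n/a"]
counts = Counter(normalize_label(v) for v in raw_labels)
print(dict(counts))  # {'N/A': 3, 'New York': 3}
```

Without normalization, the same six values would be counted as six separate categories.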

Individual data points may look out of place at first glance. If you have a legitimate reason to remove an outlier, such as incorrect data entry, doing so will improve the quality of the data you are working with.

Sometimes, however, an outlier is exactly what validates the idea you are testing, and the existence of an extreme case does not prove that the norm is wrong. You therefore need to check whether the value is accurate. If the outlier turns out to be a mistake, or is irrelevant to the study, consider removing it.
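Because an outlier should be checked before it is removed, a cautious first step is to flag candidates rather than delete them. The sketch below uses the common 1.5 × IQR rule, which is one convention among several and is not prescribed by this article. The sample readings are made up.

```python
import statistics


def flag_outliers(values, k=1.5):
    """Return values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical measurements; 500 looks like a data-entry error (perhaps 50 typed twice).
readings = [52, 48, 50, 51, 49, 47, 53, 500]
outliers = flag_outliers(readings)
print(outliers)  # [500]
```

The flagged values still need human review: only remove a point once you have confirmed it is a mistake or irrelevant to the analysis.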


Business strategy and decisions can suffer if they are based on inaccurate or "dirty" data that leads to false assumptions. If you draw the wrong conclusion from your data, you may have to face the music at a reporting meeting. Before that happens, establish a culture of data quality in your organization. One way to do this is to write down what data quality means to you and the methods you will use to achieve it. A data science tutorial can help you explore the world of data science and prepare for its challenges.

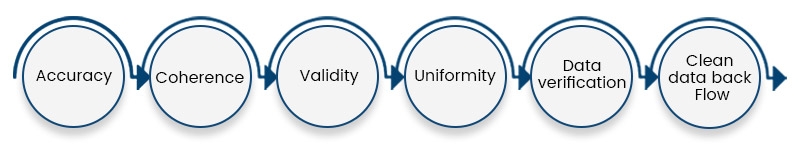

When it comes to data, most people believe that your insights and analyses are only as good as the data you use. Fundamentally, junk data equals rubbish analysis. The data can be processed through a variety of data cleansing processes. Here are the procedures:


If a company wants to be certain that its data is accurate, complete, and safe, it must cleanse its data regularly. The exact steps vary with the specifics of the data at hand. The fundamentals of the data-cleaning process in data mining are as follows:

In the long run, improved productivity and better decision-making are the results of having clean data. Advantages include:

In data mining, cleaning the data entails the following procedures.

If you are unsure of your own data-cleaning abilities, or just don't have the time to scrub every last bit of dirt from every spreadsheet, consider investing in a data-cleaning tool. These tools may cost money, but they are well worth it. Many data cleansing applications are available. A few of the best are listed below:

Does the information adhere to the standards established in its field? Error tracking and better reporting on where errors originate make it easier to correct inaccurate or corrupt information before it is used in other contexts.

Data can be cleaned in several ways. A missing value can be supplied manually by the user, and noisy values in sorted data can be smoothed using the values that surround them. This blog should help you begin the data cleaning process for data science so that you avoid working with inaccurate data. Although cleansing your data can take a while, skipping this step will cost you more than just time. Make sure the data is clean before you begin your research, because dirty data can lead to a wide range of issues and biases in your findings. You can look at Data Science Certificate online to learn more about data processing techniques including data cleansing, data collecting, data munging, etc.

FAQs

1. What Are Some Examples of Data Cleaning in Data Science?

Data cleaning is the process of organizing and fixing erroneous, improperly structured, or otherwise disorganized data. People may provide their phone numbers in different formats, for instance, if you ask for them in a survey.
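The phone number case can be sketched as follows. The function strips everything except digits and keeps only numbers of the expected length. The 10-digit length, the treatment of a leading "1" as a country code, and the sample responses are all assumptions for illustration; real survey data would need rules that match the countries involved.

```python
import re


def normalize_phone(raw, digits_expected=10):
    """Return the bare digits of a phone number, or None if it looks invalid."""
    digits = re.sub(r"\D", "", raw)  # drop spaces, dots, dashes, parentheses, "+"
    # Assumption: an extra leading "1" is a country code and can be dropped.
    if len(digits) == digits_expected + 1 and digits.startswith("1"):
        digits = digits[1:]
    return digits if len(digits) == digits_expected else None


# Hypothetical survey responses in different formats.
responses = ["(555) 123-4567", "555.123.4567", "+1 555 123 4567", "12345"]
cleaned = [normalize_phone(r) for r in responses]
print(cleaned)  # ['5551234567', '5551234567', '5551234567', None]
```

Returning `None` for entries that cannot be repaired keeps them visible for follow-up instead of silently passing bad values downstream.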

2. Which Method is Used for Data Cleaning?

Fixing data mistakes and removing incorrect information can be time-consuming and laborious, but it must be done. Data mining, a method for finding useful information in data, is one important technique for cleaning it.

3. What Makes Data Cleaning Important?

Data cleansing makes sure you keep only current, relevant records, so the information you need is easy to find when you need it. It also ensures that you don't store a lot of unnecessary sensitive data, which could compromise security.

4. Is Data Cleansing in Data Mining a Skill?

Knowing how to clean data efficiently is an important skill. Data scientists often receive data from many sources, such as government organizations and customers' IT departments, so data cleaning is a crucial ability.

5. What Procedures Are Involved in Data Cleansing?

Most data cleansing procedures follow a common framework: choose the data points that are necessary for your analysis; collect the information you require, then sort and organize it; and find and eliminate any duplicate or unnecessary values.
