
If you want to install Apache Pig on Linux, you have come to the right place: this step-by-step guide walks through the installation. Before we get to the installation itself, let us start with a brief introduction to Apache Pig.

Apache Pig is a platform for creating and executing MapReduce programs on Hadoop, and it is used to analyze large data sets. Put simply, Apache Pig is an abstraction over MapReduce.

The language used in Apache Pig is Pig Latin. It lets programmers write and run MapReduce programs without coding them directly in Java, which makes it a good fit for novices and for those who are not comfortable with Java.

Pig Latin is a high-level language for the Apache Pig platform. Programs written in Pig Latin can run on any platform, including the distributed environment of the Hadoop Distributed File System (HDFS).

Apache Pig scripts are written in Pig Latin and are then converted into MapReduce jobs. Apache Pig provides various operators to read, write, and process data; these are called the Apache Pig relational operators.
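To make the read, process, and write operators concrete, here is a minimal sketch that writes a small word-count script in Pig Latin to a file. The file names `input.txt` and `wordcount_out` are hypothetical, and the script is only an illustration, not part of the installation.

```shell
# Write a small Pig Latin word-count script to a temporary location.
# The file names used inside the script are hypothetical.
script_path="${TMPDIR:-/tmp}/wordcount.pig"

cat > "$script_path" <<'EOF'
-- Read: load each line of the input file as a single chararray field.
lines = LOAD 'input.txt' AS (line:chararray);
-- Process: split lines into words, group identical words, count each group.
words = FOREACH lines GENERATE FLATTEN(TOKENIZE(line)) AS word;
grouped = GROUP words BY word;
counts = FOREACH grouped GENERATE group AS word, COUNT(words) AS total;
-- Write: store the result in an output directory.
STORE counts INTO 'wordcount_out';
EOF

echo "Wrote $script_path"
```

Once Pig is installed, a script like this could be run by passing its path to the `pig` command.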

Apache Pig is one of the most popular tools among Hadoop developers. To install it on Linux successfully, follow the steps below.

Installing Apache Pig on Linux has a few prerequisites. First of all, Hadoop and Java must already be installed on your machine. Each has its own installation procedure for Linux-based machines; follow the official installation guide for each tool.

After downloading Java and Hadoop, install them and prepare the environment so that Apache Pig can be installed properly on your machine. Complete installation guides for both are easy to find online.
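Before moving on, it can help to confirm that both prerequisites are actually reachable from the shell. The sketch below assumes that the Java and Hadoop launchers are named `java` and `hadoop` and are expected on the `PATH`; the helper name `have_cmd` is made up for this example.

```shell
# Report whether a command is available on the PATH.
# Returns 0 when found, non-zero otherwise.
have_cmd() {
    command -v "$1" >/dev/null 2>&1
}

# Check the two prerequisites without aborting the shell.
for tool in java hadoop; do
    if have_cmd "$tool"; then
        echo "$tool: found at $(command -v "$tool")"
    else
        echo "$tool: not found, install it before installing Pig"
    fi
done
```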

To download Apache Pig, select the version that meets your requirements, or download the latest available version. Information about the available versions and their compatibility with other platforms is available online. Download the appropriate package and install it later. The MapReduce Accelerator support information is listed below:

Apache Pig versions which are supported by MapReduce Accelerators are:

Note which version of Apache Hadoop you are using for your Pig scripts and make sure it is compatible. Apache Pig releases do not support the default Apache Hadoop version, 0.21.0, so you must download a version supported by the MapReduce Accelerator. The Apache Pig version value is then supplied as the HADOOP_VERSION value when installing on Linux.

The procedure for installing Apache Pig on Ubuntu 16.04 is listed below:

Step 1: Download Apache Pig 0.16.0, the version used in this guide, from http://www-us.apache.org/dist/pig/pig-0.16.0/pig-0.16.0.tar.gz. The release is an archive with the .tar.gz extension; download it with the following command:

Command: wget http://www-us.apache.org/dist/pig/pig-0.16.0/pig-0.16.0.tar.gz
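The download can also be wrapped in a small script so that the version is set in one place. This is a sketch under assumptions: the fallback URL on the Apache archive site is not part of the original guide and is included only in case the mirror no longer serves this release, and the function name `download_pig` is made up for this example.

```shell
# Version and URLs. The primary URL is the one given in Step 1; the archive
# URL is an assumed fallback for when the mirror no longer serves this release.
PIG_VERSION="0.16.0"
PIG_TARBALL="pig-${PIG_VERSION}.tar.gz"
PRIMARY_URL="http://www-us.apache.org/dist/pig/pig-${PIG_VERSION}/${PIG_TARBALL}"
ARCHIVE_URL="https://archive.apache.org/dist/pig/pig-${PIG_VERSION}/${PIG_TARBALL}"

# Download the tarball into the current directory, trying the primary URL
# first. Defined as a function so nothing is fetched until it is called.
download_pig() {
    wget -O "$PIG_TARBALL" "$PRIMARY_URL" || wget -O "$PIG_TARBALL" "$ARCHIVE_URL"
}

echo "Will download: $PRIMARY_URL"
```

Calling `download_pig` performs the actual download.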

Step 2: Extract the compressed archive with the tar command. The following commands extract the archive and list the resulting files:

Command: tar -xzf pig-0.16.0.tar.gz

Command: ls

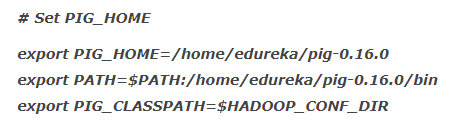
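If you prefer to extract into a specific directory rather than the current one, the extraction step can be sketched as a small helper. The function name `extract_archive` and the choice of destination are assumptions made for this example.

```shell
# Extract a gzip-compressed tarball into a destination directory.
# Both arguments are required; the destination is created if missing.
extract_archive() {
    archive="$1"
    dest="$2"
    if [ ! -f "$archive" ]; then
        echo "archive not found: $archive" >&2
        return 1
    fi
    mkdir -p "$dest" && tar -xzf "$archive" -C "$dest"
}
```

For example, `extract_archive pig-0.16.0.tar.gz "$HOME"` followed by `ls "$HOME"` should show the extracted `pig-0.16.0` directory.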

Step 3: Update the Apache Pig environment variables in the “.bashrc” file. Setting them there lets you run the pig command from any directory, without changing into the Pig directory each time.

It also lets any application that needs the location of the Apache Pig installation look it up. Use the following command to open the file for editing:


Command: sudo gedit .bashrc

You can add the following commands at the end of the file:

Before updating the environment variables for Pig, make sure that the Hadoop path is also set.
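The exact lines are not reproduced in the text above, so the following is only a sketch of what such entries typically look like. It assumes the archive was extracted into the home directory, that `HADOOP_HOME` is already exported, and that Hadoop keeps its configuration under `etc/hadoop`; adjust the paths to your own layout.

```shell
# Assumed install location: the archive was extracted into the home directory.
export PIG_HOME="$HOME/pig-0.16.0"

# Make the pig launcher callable from any directory.
export PATH="$PATH:$PIG_HOME/bin"

# Point Pig at the Hadoop configuration directory, but only when the
# Hadoop path has already been set (assumes the etc/hadoop layout).
if [ -n "${HADOOP_HOME:-}" ]; then
    export PIG_CLASSPATH="$HADOOP_HOME/etc/hadoop"
fi
```

After saving the file, run `source ~/.bashrc` or open a new terminal so the changes take effect.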

Step 4: Now check the Pig version to confirm that Apache Pig has been installed properly. If the version is not displayed, you may have to repeat the steps above.

Command: pig -version

Step 5: Check the Pig help option to list all the available commands:

Command: pig -help
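The version check from Step 4 can be scripted so that a failed installation is reported clearly. This is a minimal sketch; the function name `check_pig_install` and its messages are made up for this example.

```shell
# Return 0 when the pig launcher is on the PATH and reports a version,
# and 1 otherwise, so the caller can decide whether to revisit the steps.
check_pig_install() {
    if ! command -v pig >/dev/null 2>&1; then
        echo "pig is not on the PATH; check the .bashrc entries" >&2
        return 1
    fi
    if ! pig -version; then
        echo "pig was found but did not report a version" >&2
        return 1
    fi
    return 0
}
```

Running `check_pig_install && echo "Pig is ready"` prints the confirmation only when both checks pass.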

Step 6: Run Pig to start the Grunt shell, which is used to run Pig Latin scripts.

Command: pig

Congratulations! Apache Pig is now installed on your Linux OS. It can run in two execution modes: MapReduce mode and local mode.


Let us discuss each of the modes briefly.

The following two modes are available for executing Apache Pig in a Linux environment:
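The mode is chosen with the `-x` option of the `pig` command: `local` runs against the local file system in a single JVM, while `mapreduce` submits jobs to the Hadoop cluster and is the default. The sketch below only prints the resulting command lines; the helper name `pig_mode_args` is made up for this example.

```shell
# Map a mode name to the arguments passed to the pig launcher.
pig_mode_args() {
    case "$1" in
        local)     echo "-x local" ;;
        mapreduce) echo "-x mapreduce" ;;
        *)
            echo "unknown mode: $1 (expected local or mapreduce)" >&2
            return 1
            ;;
    esac
}

# Print the command line for each mode without running it.
for mode in local mapreduce; do
    echo "pig $(pig_mode_args "$mode")"
done
```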

The Apache Pig framework has various components. The major components of Pig are listed below:

Final Words:

Hadoop is a popular framework among database experts, and it comes with a number of tools and technologies. Thanks to its popularity, there are many job options in the market, and most developers use the platform on Linux or other operating systems.

Apache Pig is a good fit for working with Hadoop on Linux. Installing it is a step-by-step procedure that is not difficult, but the steps must be followed correctly for the tool to work properly.

The installation guide above lays out the procedure in detail, and you can follow it step by step if you want to use Apache Pig, or its Pig Latin scripting language, to leverage the Hadoop platform. The Apache website hosts the download link for the framework, along with a help guide for the platform.

To learn more about Hadoop and Apache Pig, you can start with the Training and Certification program at JanBask. The right learning not only boosts your knowledge but also gives your career the direction it needs for you to become successful and established.


The JanBask Training Team includes certified professionals and expert writers dedicated to helping learners navigate their career journeys in QA, Cybersecurity, Salesforce, and more. Each article is carefully researched and reviewed to ensure quality and relevance.
